This project was funded by Horizon Europe and the UTTER project.

About UTTER

Imagine exploring a museum or store and having a virtual guide that instantly speaks your language. That is the promise of UTTER, a Horizon Europe research and innovation project coordinated by the University of Amsterdam. UTTER, short for Unified Transcription and Translation for Extended Reality, is building the next wave of language technology for XR, including fast speech transcription, real-time translation and clear summaries of conversations. The aim is simple and powerful: make multilingual assistance work in real situations, with AI that understands context, tone and what people actually need in the moment.

ZAUBAR’s role

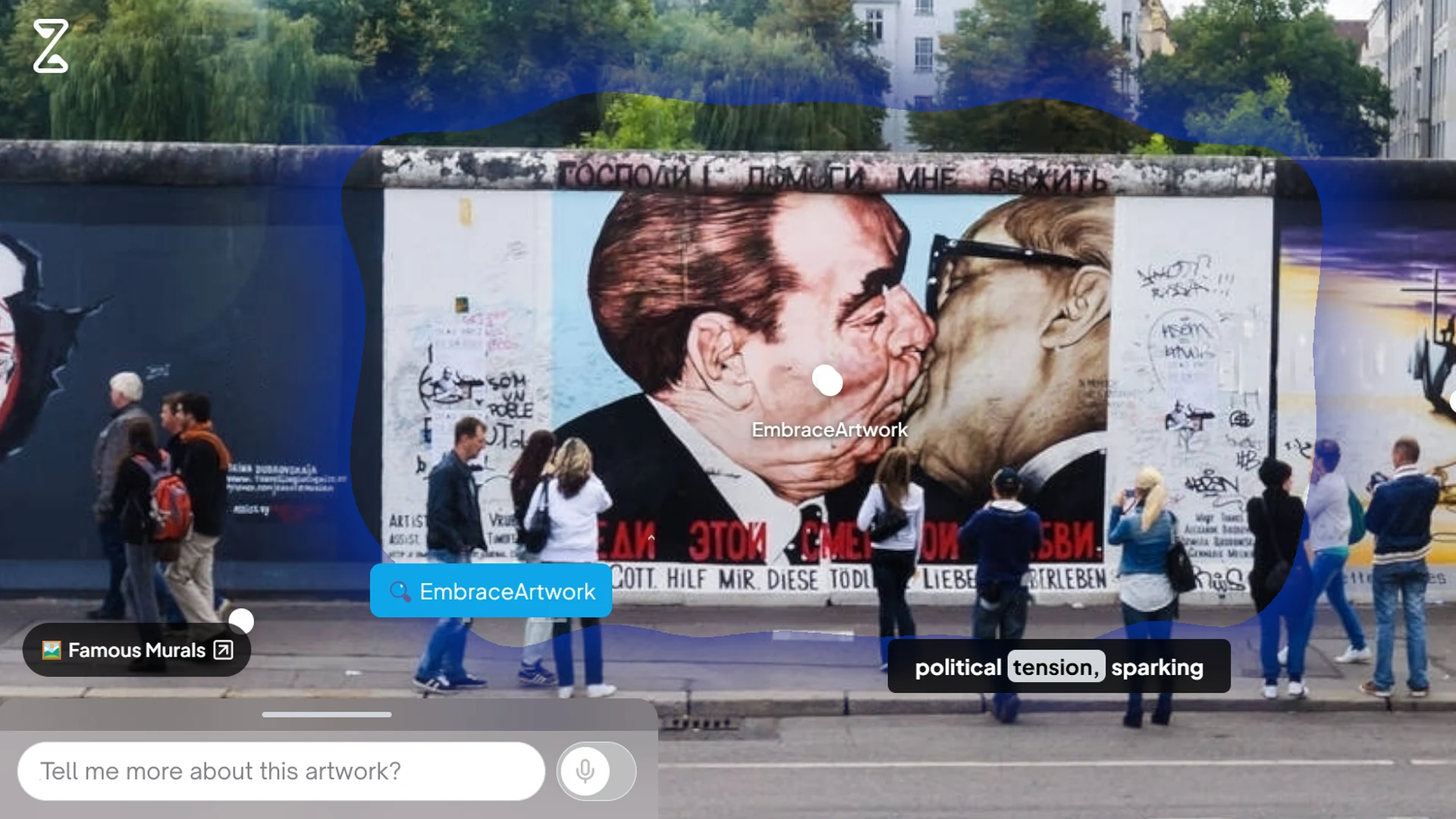

ZAUBAR joined the UTTER project to take the project’s language capabilities out of the meeting room and into real places. Our pilot, VISIXR (Vision AI for XR), turns UTTER’s technology into an interactive experience with no app install required. Our agent responds to the content you are viewing, whether it is an image, a scene, a product page or any website, so you can ask questions in your language and get answers that fit the context. The agent can highlight the relevant area, speak back or show text, and follow up with a short recap so the important points stick.

What makes this different from a regular chatbot is the mix of language and vision. The agent knows what is on screen and responds in a way that suits the moment. Imagine a photo of a Berlin Wall mural: you can switch languages, choose a speaking style, ask for a quick overview or a deeper explanation, and tap hotspots to learn about specific details. You can move from “tell me more about this artwork” to “who painted it, when, and why it matters” without losing the thread. All of this runs on a real-time pipeline for speech-to-text, intent understanding and text-to-speech. That pipeline is paired with image analysis that segments what you see, so the agent can refer to specific parts of the image precisely, and the whole experience is delivered through a Unity/WebGL front end that runs directly in the browser.
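This post does not describe how VISIXR’s pipeline is implemented. As a rough illustration of how a single conversational turn could move through the stages named above, here is a minimal Python sketch. Every type and function in it (`Region`, `Turn`, `speech_to_text`, `detect_intent`, `find_region`, `text_to_speech`, `handle_turn`) is a hypothetical stand-in, not ZAUBAR or UTTER code. The speech and intent stages are simple stubs, and the answer is looked up in a small dictionary that plays the role of a verified knowledge base.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Region:
    """A segmented area of the image the agent can point to (hypothetical)."""
    label: str
    bbox: tuple[int, int, int, int]  # (x, y, width, height) in pixels


@dataclass
class Turn:
    transcript: str
    intent: str
    highlight: Region | None
    reply_text: str


def speech_to_text(audio: str) -> str:
    # Stub: a real system would run a speech recognition model.
    # Here the "audio" is already text, so we only normalize whitespace.
    return audio.strip()


def detect_intent(text: str) -> str:
    # Stub keyword matcher standing in for a real intent classifier.
    lowered = text.lower()
    if "who" in lowered or "when" in lowered:
        return "details"
    if "more" in lowered or "overview" in lowered:
        return "overview"
    return "general"


def find_region(text: str, regions: list[Region]) -> Region | None:
    # Return the first segmented region whose label appears in the question,
    # so the front end could highlight it.
    lowered = text.lower()
    for region in regions:
        if region.label.lower() in lowered:
            return region
    return None


def text_to_speech(text: str) -> bytes:
    # Stub: a real system would synthesize audio; here we just encode the text.
    return text.encode("utf-8")


def handle_turn(
    audio: str, regions: list[Region], knowledge: dict[str, str]
) -> tuple[Turn, bytes]:
    transcript = speech_to_text(audio)
    intent = detect_intent(transcript)
    region = find_region(transcript, regions)
    # Answer only from the supplied knowledge base; fall back to the whole scene.
    topic = region.label if region else "scene"
    reply = knowledge.get(topic, "I don't have verified information about that yet.")
    return Turn(transcript, intent, region, reply), text_to_speech(reply)
```

Keeping each stage behind its own function is one plausible way to let a real speech, language or vision model replace a stub without changing the orchestration in `handle_turn`.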

For businesses and institutions, this opens practical doors. Brands and retailers can turn images and displays into interactive assistants that answer questions and compare features. The agent can draw on a company’s verified knowledge base, so you control the images, product data and FAQs it references and keep answers accurate and on brand. Museums and cultural sites can welcome international visitors with explanations in their own language. Trainers and educators can guide learners through complex visuals, with simple questions leading to the right level of detail. We are continuing to refine the experience and bring it into live ZAUBAR projects, aiming for a dependable, plug-and-play component that adds multilingual guidance, quick summaries and scene-aware answers to tours, exhibitions, events and learning spaces.
